Posted Fri, 4 Mar 2011, 5:02pm by Shawn D. Sheridan

This series of blog posts originally appeared as a white paper I wrote a number of years ago. Nonetheless, the content is still relevant today, and useful to anyone in the software engineering business, be it commercial software producers, or in-house development shops.

Background

A number of years ago, on the first day of my new job as vice president of the software development team at a leading financial institution, as I was being introduced to my peers in the operations area, one of the supervisors in the customer relations call centre came hustling down the aisle towards my new boss and me, a look of frustration on his face, exclaiming, “The whole system is down! We can’t answer any calls!”

With a sinking feeling in the pit of my stomach, I knew that it was my system that was down. And 250 people couldn’t respond to the queries of the firm’s customers until it was back up. And it was my accountability. And it was my first few hours on the job. It could only go up from here!

What happened? Well, my brand new team had implemented into production one of the weekly releases the weekend before (yes, I said weekly), and it had failed. It took some scrambling that morning, with me frankly looking on helplessly and doing my best to stay out of the way of the people who really needed to do the work. But it was clear to me that there were problems with the quality of the software that my new team was producing, and that it was costing the company money and customer good-will. And that wasn’t good.

Three years later, we were down to five releases in the year. The first release of that year (a substantial one affecting 200 server-side modules and six large user-interface modules) saw five defects discovered in user-acceptance test, which we fixed very quickly, completing the rest of the testing successfully and without incident.

And in production, not one defect surfaced.

We had no downtime, we continued to serve our customers without interruption, my business partners were happy because they got what they wanted, it worked, and it wasn’t like pulling teeth to get there. And my team was happy because they had done a good job, no one had to fight any fires, they worked at a reasonable pace throughout the release, no one was stretched to breaking, and their business partners were happy with them and not yelling at them any more.

What changed? Well, believe it or not, it wasn’t more testing — not in the traditional sense of functional, end-of-SDLC testing. Instead, over a three-year period, I was able to make enough inroads and bit-by-bit changes to the way we designed and built the software in the first place (up-front practices) so that by the time we got to testing, it was in very good shape.

Over that three-year period, all those little changes amounted to a significant departure from the way things had been done, in both my shop, and in how we interacted with our business partners in the rest of the firm. Our business partners bought in to the changes, because they eventually saw that they got what they wanted, the way they wanted, working, sooner than what they used to get, which was something that wasn’t really what they wanted, and maybe it worked, but chances are it really didn’t, even if the system didn’t crash.

The metrics cited in the posts that follow are ones that I have implemented in my career to help me manage my software engineering processes, and to achieve the kind of results illustrated in my anecdote above. Without these kinds of measures, it is extraordinarily difficult to determine where to focus, let alone build a business case for making changes. However, given the state of software engineering in most shops, making change is critical.

I base those conclusions on a number of premises:

- Management is accountable for long-term share- and stake-holder value. All levels of management in all areas of the enterprise must keep this in mind. That includes software engineering management.

- Thus, consistent production of quality software products and services at an appropriate gross-margin (price less cost-to-make) contributes toward achievement of long-term share-holder value. Conversely, poor software quality costs money immediately, deteriorates organisational reputation, and will lead to the eventual demise of the producing organisation.

- You cannot manage what you are not measuring. Without metrics that are defensible, you will wind up managing based on hearsay, speculation, and emotion.

- The most economical approach to dealing with defects is not to create them in the first place. Failing that, the second-most economical approach to defects is to find and fix them as close as possible to when they are created.

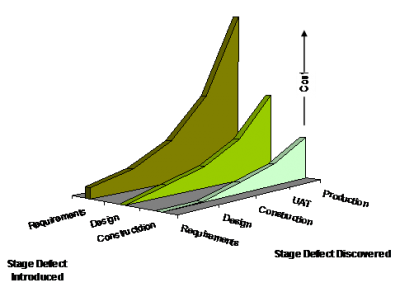

Figure 1: Cost to fix defects increases exponentially as the point where they are discovered becomes further and further away from where they were introduced.

- Given the fourth premise above, system functional and regression testing, and anything later in the Software Development Life Cycle (SDLC), is too late to find defects. Fixing them at or after this point in time is exorbitantly expensive (see Figure 1), is rarely done properly, is seldom reflected in all work-products where the fix should be reflected (such as documentation on design and requirements that should be altered to align with the fix so that teams coming along afterwards to further enhance that area of the system have accurate designs from which to work), and leads to a legacy of fragility in the software product, with the associated increase in future costs.

- The vast majority of companies manage defect tracking, analysis, reporting, and introduction-rate-reduction very poorly, as evidenced by a number of industry metrics. For example, for most software initiatives, 40% to 50% of the effort of the project is avoidable rework resulting from defects introduced into the product[1]. On average, roughly 60% of those defects are introduced in requirements stages[2].

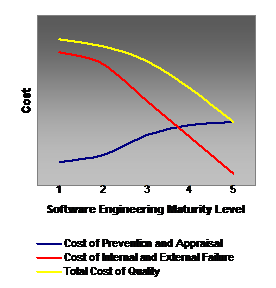

Figure 2: Overall cost of software quality reaches its minimum at the highest level of maturity.

- Thus, the further along the software engineering capability maturity model you can get, the lower your costs will be. This is illustrated in Figure 2. Unlike those who advocate stopping at a lower level of maturity to find an optimal cost, I believe that the highest level of maturity does not come at infinite cost, but rather at a finite cost (the blue line) that, while being higher than the cost of maintaining a lower maturity level, is nonetheless substantially less than the costs of failure.

Getting to the highest level of software engineering maturity means having processes and practices in place that (a) prevent the introduction of defects in the first place, and (b) if they do get introduced (no one is perfect), detect them almost immediately after they are introduced so that repair can happen at once. As stated earlier, while traditional testing is an important part of this set of processes and practices (falling into the category of “Prevention and Appraisal”), it is not the most important part of the overall engineering discipline, because by the time defects are detected in testing, it is too late.

- All software initiatives go through the stages of

- conceptualise,

- develop requirements,

- design,

- construct,

- accept and deploy, and

- maintain and sunset.

While some of these stages may overlap, be grouped together, and / or called different things depending on the development paradigm, nonetheless, key aspects and decisions in each of the above archetypal stages are present in every software development effort.
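To put the rework and defect-origin figures from the premises above into concrete terms, here is a minimal Python sketch. The 40% to 50% and 60% proportions are the ones cited from the references; the project size (1,000 person-days), the defect count (200), and the function names are hypothetical, chosen only for illustration.

```python
def avoidable_rework_range(total_effort_days, low_pct=40, high_pct=50):
    """Return (low, high) person-days of avoidable rework.

    The 40-50% default range is the figure cited in the text;
    total_effort_days is whatever the project's total effort is.
    """
    low = total_effort_days * low_pct / 100
    high = total_effort_days * high_pct / 100
    return (low, high)


def defects_from_requirements(total_defects, requirements_pct=60):
    """Estimate how many defects were introduced in the requirements stages.

    The 60% default is the rough average cited in the text.
    """
    return total_defects * requirements_pct / 100


if __name__ == "__main__":
    # Hypothetical inputs, not data from any real project.
    low, high = avoidable_rework_range(1000)
    print(f"Avoidable rework: {low:.0f} to {high:.0f} person-days")
    print(f"Defects introduced in requirements: {defects_from_requirements(200):.0f}")
```

On those made-up inputs, between 400 and 500 of the 1,000 person-days would be avoidable rework, and about 120 of the 200 defects would trace back to the requirements stages, which is why the focus belongs on up-front practices rather than on end-of-cycle testing.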

Going forward from these premises, we then take a look at what it is we need to measure in order to achieve high software quality before we get to testing. Metrics alone will not do this. But what they do do is point us in the direction of what we need to change, and how, and they then help to tell us how much better things are once we’ve made the right changes, or conversely what to back away from if the metrics “go south” after a change.

Read Part 2 — What to Measure.

[1] “Software Defect Reduction Top 10 List”, Barry Boehm, Victor R. Basili, Software Management, January 2001. Of note, a 2002 Construx Software Builders, Inc. keynote presentation puts this proportion as high as 80% for the average software project.

[2] Optimize Quality for Business Outcomes / A Practical Approach to Software Testing, Andreas Golze, Charlie Li, Shel Prince, A Mercury Press Publication, 2006, Pg. 4, from a 2002 study conducted by the National Institute of Standards and Technology, RTI Project 7007.001.
